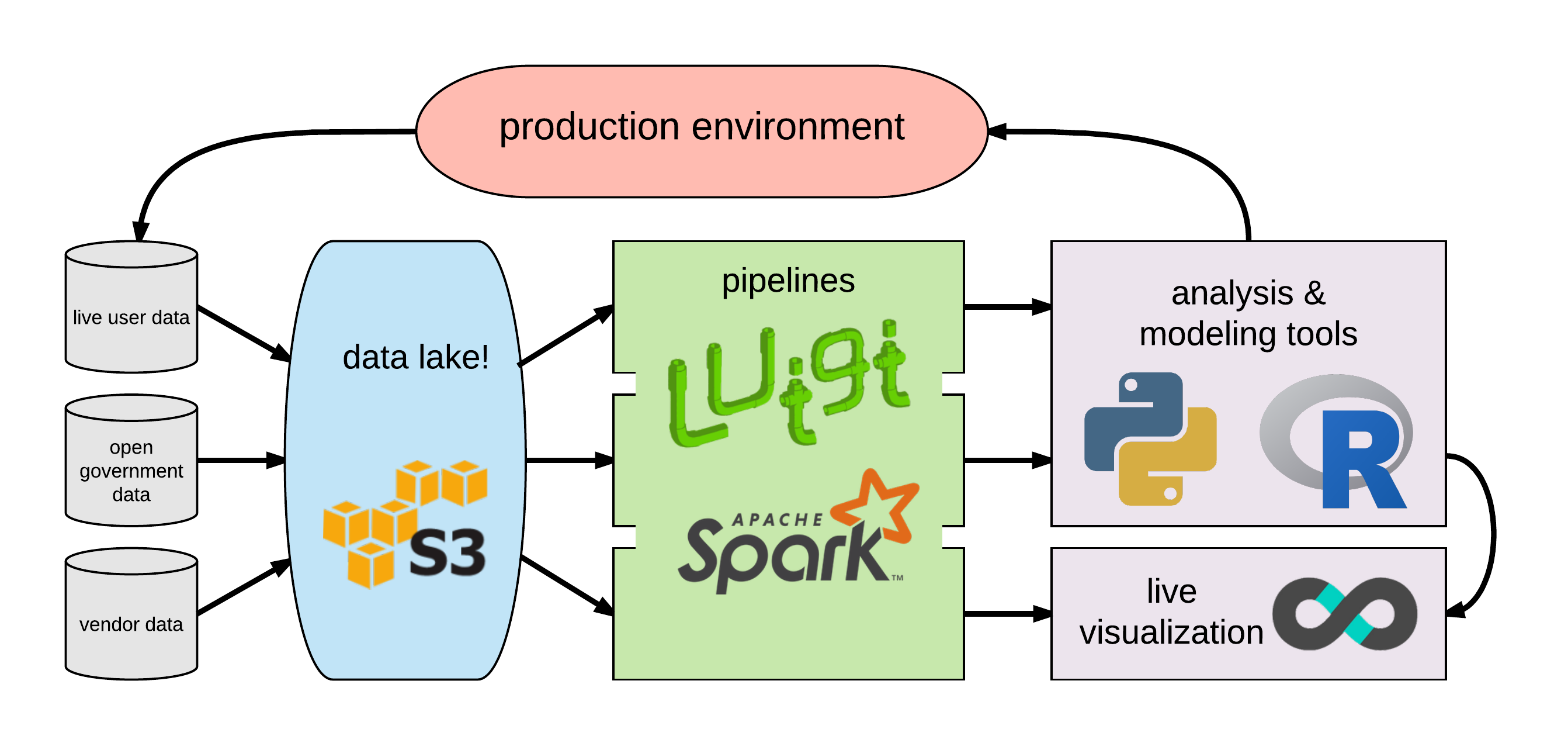

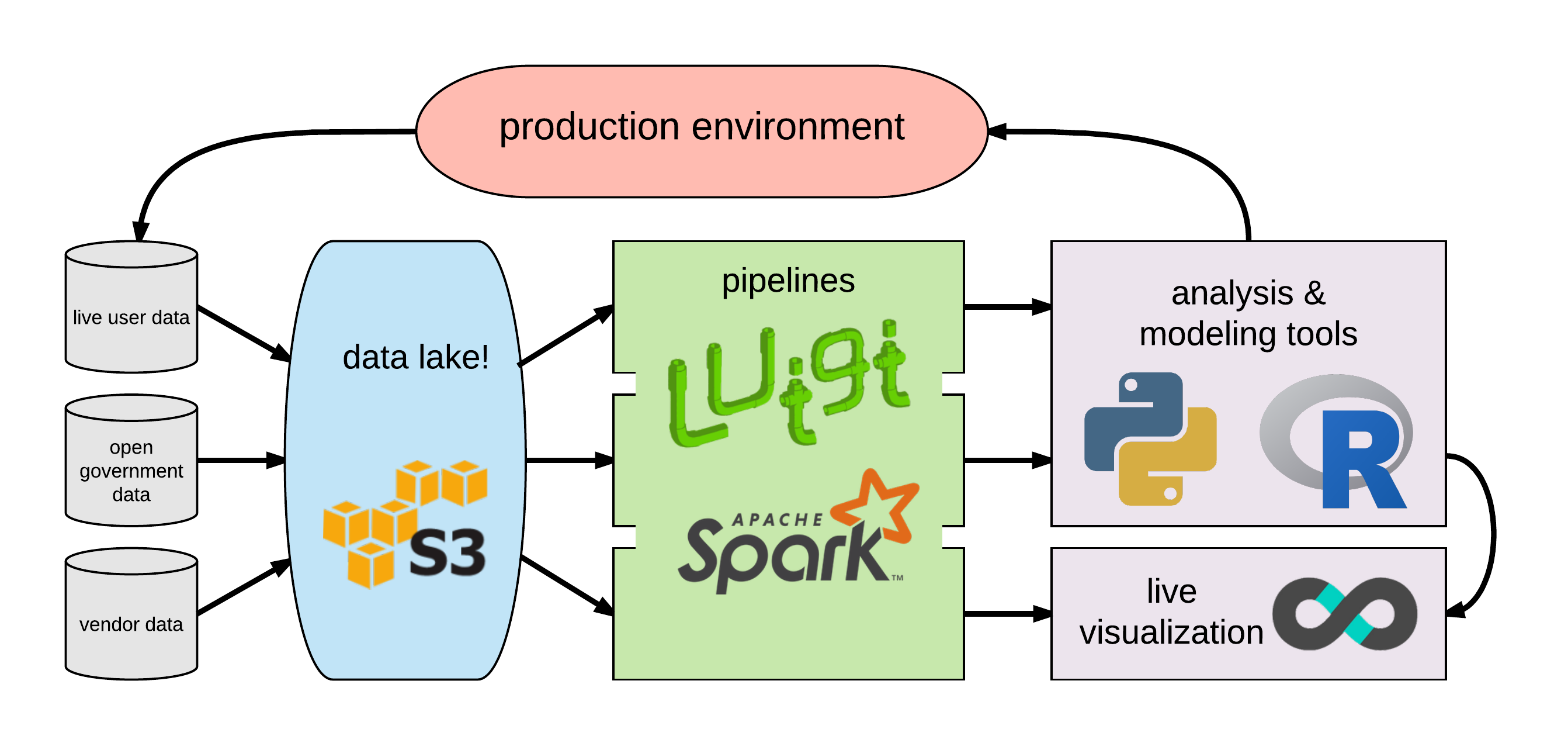

In automotive manufacturing, building a holistic view of the production process necessitates integrating heterogeneous data from sensors, quality control systems, supply chain management software, and sales databases into a unified data platform. This data platform must support predictive modeling, advanced analytics, and process optimization in a scalable, reproducible manner, decoupled from live production systems.

Data originates from diverse sources: IoT sensor readings, QC metrics, vendor EDI feeds, and CRM databases. The objectives are to enable production analytics, validate manufacturing processes at scale, and drive data-driven optimization.

Monolithic architectures with siloed databases and desktop-based analysis tools cannot meet these objectives. What is needed instead is a scalable, elastic data pipeline that transforms raw manufacturing data into actionable insights and models.

Key architectural components:

A data lake is a centralized repository that stores data in its native, raw format. Because structure is applied when the data is read rather than when it is written (schema-on-read), new manufacturing data sources can be ingested without upfront schema design.
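As a rough illustration of schema-on-read, the sketch below keeps raw records untouched and applies a schema only when they are read. The record fields (`station`, `ts`, `temp_c`, `torque_nm`) and the helper `read_with_schema` are hypothetical names chosen for this example, not part of any particular tool.

```python
import json
from datetime import datetime

# Raw records as they might land in the lake: stored as-is, one JSON object per line.
# All field names here are hypothetical.
RAW_LINES = [
    '{"station": "weld-03", "ts": "2024-05-01T08:00:00", "temp_c": "412.5"}',
    '{"station": "weld-03", "ts": "2024-05-01T08:00:05", "temp_c": "415.1", "torque_nm": 31}',
    '{"station": "paint-01", "ts": "2024-05-01T08:00:07"}',
]

# The schema belongs to the reader, not the storage layer: column name -> parser.
SENSOR_SCHEMA = {
    "station": str,
    "ts": datetime.fromisoformat,
    "temp_c": float,
}


def read_with_schema(lines, schema):
    """Parse raw JSON lines, applying the schema only at read time."""
    for line in lines:
        raw = json.loads(line)
        # Missing fields become None; fields outside the schema are ignored.
        yield {
            name: parse(raw[name]) if name in raw else None
            for name, parse in schema.items()
        }


rows = list(read_with_schema(RAW_LINES, SENSOR_SCHEMA))
```

The second record carries an extra `torque_nm` field that was never declared up front. It is stored without complaint and simply ignored by this reader; a different reader with a different schema could pick it up later.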

Amazon S3 offers a highly durable, scalable, and cost-effective storage layer for a data lake. However, robust data governance, metadata management, and data cataloging are essential to prevent the lake from turning into a "data swamp".

Complex data transformation and integration workloads require orchestrated execution of interdependent tasks. The pipeline should be modeled as a directed acyclic graph (DAG), with each vertex representing an idempotent ETL operation, so that a failed or repeated run can be retried safely without duplicating or corrupting data.
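The DAG idea can be sketched with nothing but the Python standard library. The example below is a minimal illustration, assuming three hypothetical tasks and an in-memory dictionary standing in for the data lake; it uses `graphlib.TopologicalSorter` (Python 3.9+) to derive an execution order and is not a substitute for a real orchestration framework.

```python
from graphlib import TopologicalSorter

# Stand-in for the data lake: output name -> data. A real pipeline would write to S3.
store = {}


def extract_sensors():
    store["raw_sensors"] = [
        {"station": "weld-03", "temp_c": 412.5},
        {"station": "weld-03", "temp_c": 415.1},
    ]


def extract_qc():
    store["raw_qc"] = [{"station": "weld-03", "defects": 2}]


def join_station_stats():
    temps = [r["temp_c"] for r in store["raw_sensors"]]
    defects = sum(r["defects"] for r in store["raw_qc"])
    # The output is overwritten under a fixed name, so re-running gives the same state.
    store["station_stats"] = {
        "mean_temp_c": sum(temps) / len(temps),
        "defects": defects,
    }


# Each task maps to the set of tasks it depends on.
DAG = {
    extract_sensors: set(),
    extract_qc: set(),
    join_station_stats: {extract_sensors, extract_qc},
}


def run_pipeline(dag):
    """Run every task after its dependencies; raises CycleError if the graph is cyclic."""
    order = list(TopologicalSorter(dag).static_order())
    for task in order:
        task()
    return [task.__name__ for task in order]


order = run_pipeline(DAG)
first_result = dict(store["station_stats"])
run_pipeline(DAG)  # second run: idempotent tasks leave the store unchanged
```

Because every task overwrites a named output instead of appending to it, running the pipeline twice leaves the store in the same state as running it once, which is the property that makes retries safe.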

Workflow orchestration frameworks like Luigi (open-sourced by Spotify) allow such task graphs to be defined as Python code. Because the pipelines are ordinary Python modules, they can be unit-tested and kept under version control, and Luigi's central scheduler can coordinate workers running on multiple machines.

Datasets that exceed a single machine's memory call for parallel and out-of-core computing. Dask provides parallel counterparts of familiar Python data structures such as NumPy arrays and pandas DataFrames, splitting them into chunks so that algorithms can run out of core and computations are evaluated lazily.
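To make the out-of-core and lazy ideas concrete, here is a plain-Python sketch that uses only the standard library. It is not Dask's API; it only illustrates the underlying pattern of reading data in chunks on demand and combining per-chunk partial results. The column name `temp_c` and both helper functions are hypothetical.

```python
import csv
import io
from itertools import islice


def read_chunks(file_obj, chunk_size):
    """Lazily yield lists of at most chunk_size rows; nothing is read until iterated."""
    reader = csv.DictReader(file_obj)
    while True:
        chunk = list(islice(reader, chunk_size))
        if not chunk:
            return
        yield chunk


def mean_temperature(file_obj, chunk_size=2):
    """Combine per-chunk partial sums, holding only one chunk in memory at a time."""
    total, count = 0.0, 0
    for chunk in read_chunks(file_obj, chunk_size):
        total += sum(float(row["temp_c"]) for row in chunk)
        count += len(chunk)
    return total / count if count else None


# In-memory stand-in for a CSV file too large to load at once.
data = io.StringIO(
    "station,temp_c\n"
    "weld-03,410\n"
    "weld-03,420\n"
    "weld-04,400\n"
    "weld-04,430\n"
    "weld-05,440\n"
)
result = mean_temperature(data)  # 420.0
```

Libraries such as Dask automate this pattern, and additionally build a task graph so that chunks can be processed in parallel.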

Apache Spark is a unified analytics engine supporting batch, streaming, and interactive workloads. Spark's resilient distributed datasets (RDDs) and DataFrames facilitate expressing parallelizable operations on structured and unstructured data at petabyte scale.

Dask is well suited to iterative, exploratory analysis by data scientists already working in the Python ecosystem, while Spark is more commonly chosen for production ETL and machine learning pipelines.

Browser-based interactive dashboards democratize data, making it accessible to non-technical stakeholders. Apache Superset (originally developed at Airbnb) and Metabase offer SQL-based charting and drill-downs without requiring any application code.

These BI tools can connect to analytical databases like Amazon Redshift or Snowflake, populated by scheduled ETL jobs, to power operational reports and executive dashboards. How close to real time these reports are depends on how frequently the ETL jobs run.
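The load-then-query pattern can be sketched as follows. This is a minimal illustration that uses an in-memory SQLite database as a stand-in for the analytical warehouse; the table `daily_output`, its columns, and the function `load_daily_output` are hypothetical, and a real Redshift or Snowflake load would use that platform's own bulk-loading mechanism.

```python
import sqlite3


def load_daily_output(conn, day, rows):
    """Replace one day's partition so a re-run of the scheduled job adds no duplicates."""
    with conn:  # commits on success, rolls back on error
        conn.execute("DELETE FROM daily_output WHERE day = ?", (day,))
        conn.executemany(
            "INSERT INTO daily_output (day, line, units, defects) VALUES (?, ?, ?, ?)",
            [(day, line, units, defects) for line, units, defects in rows],
        )


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE daily_output (day TEXT, line TEXT, units INTEGER, defects INTEGER)"
)

batch = [("assembly-1", 480, 6), ("assembly-2", 520, 13)]
load_daily_output(conn, "2024-05-01", batch)
load_daily_output(conn, "2024-05-01", batch)  # simulated re-run of the same schedule slot

# The kind of query a dashboard chart might issue: defect rate (%) per production line.
report = conn.execute(
    "SELECT line, ROUND(100.0 * SUM(defects) / SUM(units), 2) "
    "FROM daily_output GROUP BY line ORDER BY line"
).fetchall()
```

Deleting and re-inserting a whole day's partition inside one transaction keeps the load idempotent, so a dashboard never shows doubled counts after a job is retried.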

The platform delivers the most value when teams collaborate on common datasets. Centralized storage and compute eliminate data silos and the inconsistencies that arise when each team maintains its own copy of the data.

Deploying the data lake on S3, with data pipelines and analytical workloads running on Amazon EC2 or EMR clusters, streamlines infrastructure management and security. The processing and orchestration layers can be built on open-source technologies, with cloud services used mainly for storage and compute.